Language is for writing is for thought

March 11 2023

Kate Manne, over on her lovely substack:

I write in this space in order to put ideas out there quickly, often in an unpolished form. I like the idea of sometimes making my mistakes in a relatively open forum. Unlike an article, or a book, which are years and years in the making, this substack newsletter capitalizes on my strong desire to write, sometimes quickly: to seize a few hours or even minutes here and there, and try to articulate ideas which have sometimes nagged at me for a decade or more without ever reaching fruition. By and large, I think by writing.

All my agonizing over what this blog is for, and what I should write about, and why, and someone else puts it down in better words than I ever could. As it does for her, writing clarifies my ideas and helps me think. I don’t, however, think by writing right now, though I would like to. I aspire to it, for its own sake.

The Bridges of Venkat County

March 3 2023

I’ve burnt a few bridges in my short life. Some bridges have water under them, but most don’t. I was obsessed with idioms when I started writing this post, perhaps because the reading for the research seminar that week was on construction grammars, where idioms (and constructions of all kinds) are as essential as syntactic categories, lexical items, and morphemes. I might be misunderstanding construction grammar, considering I have only read a few papers about it, but I’ll take this opportunity regardless to overuse various constructions to death, violating one of Orwell’s maxims.

It was only recently that I realized that sometimes, frayed and broken relationships cannot be fixed. I could apologize, make up for my mistakes and shortcomings, undo all the harm I’ve caused, and yet. I think I learned that a sincere apology transfers the responsibility onto the other person to forgive, forget, and accept you back. The truth is that there is no moral or natural law that mandates people forgive, forget and accept after a sincere apology, nor would I want there to be.

All of this may seem obvious in hindsight, but this is my blog after all. I can write about the trivial as if it were a life-altering revelation.

The finite, bounded interface trumps the infinite, unrestricted interface

31 January 2023

Me, back in October 2020:

It struck me that today’s AI assistants (Alexa, Google Assistant, Siri) are all based around having conversations. If these systems ever approached anything close to human intelligence and common-sense, perhaps having a conversation is the best way to interact with AI…maybe the best way to interact with artificial intelligence is the same way we interact with other people — using conversations.

Austin Henley, in a lovely blog post from a few days ago:

A great user interface guides me and offers nudges.

Couldn’t a natural language interface help with that?

Certainly.

But not as the only option. Probably not even the main interface.

My first thought after reading this was: why didn’t I think of that?! Everything Austin says about software and user interface design I (sort of) knew two years ago. People know what they want, but they can rarely express it well, especially in words. Relying solely on natural language to gauge user intent is lazy, and will lead to poorly designed software.

A brief digression to put down my perspective on software and UI design: I believe that self-imposed constraints and strong opinions (weakly held) are good and necessary for building well-designed software. This path will lead to your product not appealing to everyone, but that’s okay! My favorite apps are opinionated and have a relatively small or medium-sized customer base, but that’s all their makers ever wanted. It always frustrates me when a new service or product clearly aims to be ‘the next big thing’ from the start. Why do you need millions of users or customers? If you’re one person building something new, you only need thousands.

Circling back: my original post was about the best way to interact with virtual assistants like Siri. Natural language is the only interface for this class of software. These assistants have been around for a decade, and while useful (especially for accessibility), they haven’t revolutionized how we do things every day, nor have they opened up new opportunities, I think. How do we make people successful at using computers through a lazy interface?

I hope I’ll play a role in answering that question one day.

Why so glib?

30 January 2023

Two years ago, I wrote a post right as I started my study of interpersonal biases in language. I don’t remember what my state of mind was when I wrote it, but I do know what it is like right now. If anyone embarking on a Ph.D. is reading this, I’d love for them to know how tortured the journey was to get to where I am. The only idea I had back then was to computationally replicate and evaluate the Linguistic Intergroup Bias (LIB). Now, after many missteps and detours, and a lot of nudging from David & Jessy, I have (I think) a topic that I can call mine; a research program that no one else has worked on (yet) that I can initiate and contribute to. Generalized (Linguistic) Intergroup Bias is what I’ve termed it.

I feel relieved and optimistic at this juncture in my Ph.D. And yet, the fear is still there, receded into the background. I can envision scenarios where it engulfs me again, but not many. In any case, I will face it when it comes, and only I will remain after it passes.

Will my research program lead anywhere, change anyone’s mind, or even be one that anyone apart from me finds interesting? Probably not, but I’ve come to realize that all that matters is that I do the work, do it well, and grow during the process. I’ve also come to learn that, as with programming languages, whether a research program gains traction in a community, especially a relatively young one like Computational Linguistics, has little to do with the program’s promised benefits; external factors like the progenitor’s community and the program’s ease of use play a big role. I can only aim to do my best with what I control: ensuring that I pursue my research questions honestly and rigorously.

Addendum

I was a little too optimistic about giving my own name to my focus of study, but it was good in the end to get pushback on Generalized Intergroup Bias or GIB. I study intergroup bias: how is in-group language different from out-group language? That’s the one-sentence summary of my dissertation, and I’m quite happy with it. My own term and acronym would have just made it too confusing.

Disclosure Triangle of Sadness

13 January 2023

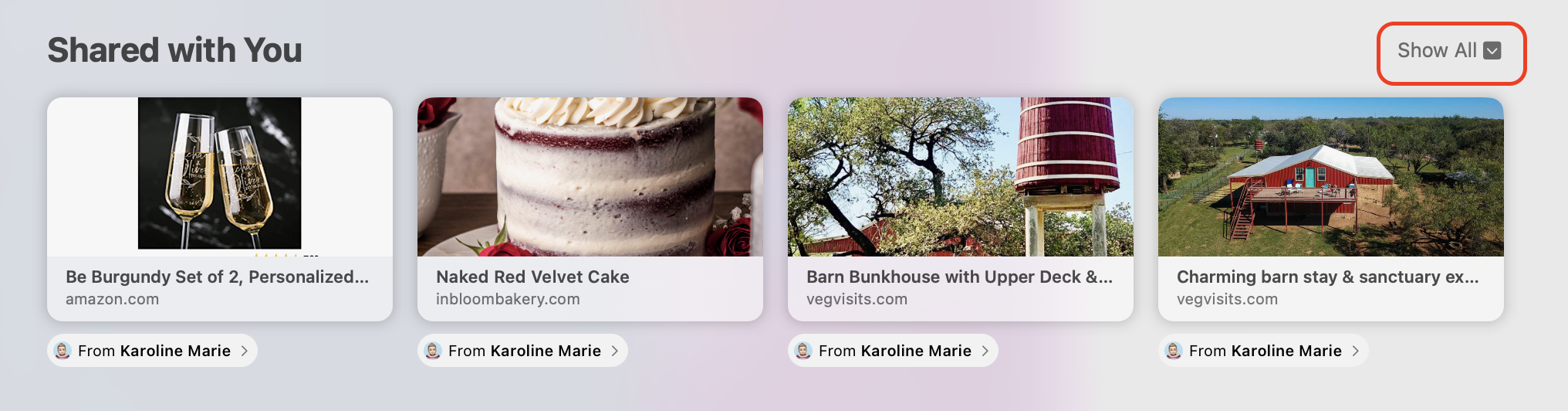

One of the advantages of not being a full-time iOS app developer is that I can spend an unreasonable amount of time on minuscule details of app design. Paying attention to the details is important and a sign that you care, but it’s rarely noticed or rewarded by others, while shipping something that works is. In any case, sweating the details is a luxury I can afford now, which brings me to a UI design curiosity of Apple’s that sent me down a rabbit hole. Consider the empty tab page in Safari on the Mac:

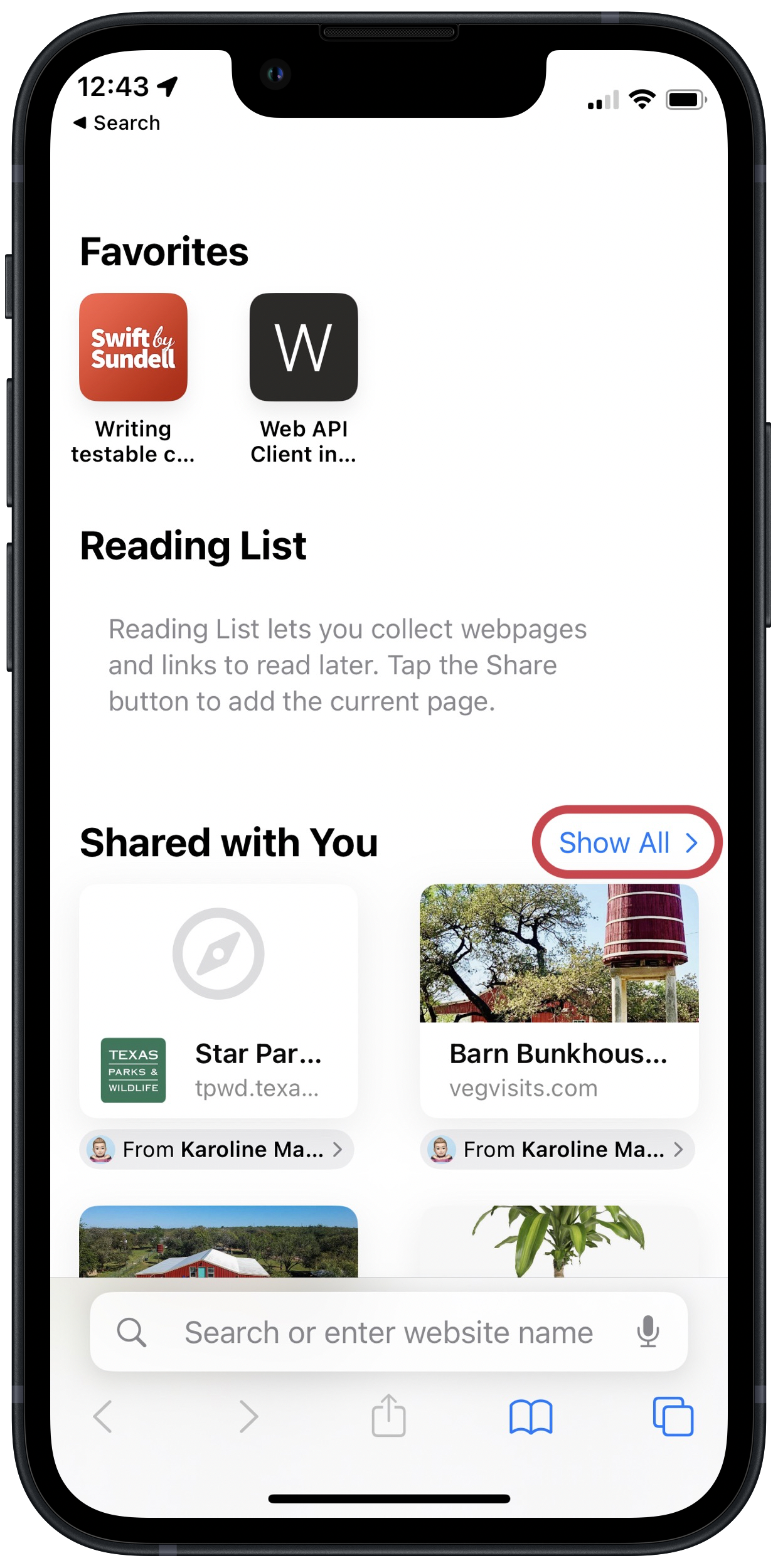

I’ve circled the disclosure control next to the ‘Shared With You’ section that lets you expand and show more items. Now consider the same New Tab page on iOS Safari:

On the Mac, the control for expanding ‘Shared With You’ is a downwards-pointing chevron; it becomes upwards-pointing when you expand it. On iOS, it is downwards-pointing after you expand it, while it points to the side when the section is collapsed.

The first thought that came to mind when I noticed the different directions of the disclosure controls was Gruber’s post on what disclosure controls ought to do:

In the iOS/Mac style, a right-pointing chevron (or triangle, depending on the OS) indicates the collapsed state, and a down-pointing chevron indicates the expanded state.

Based on that article, I thought I had found another instance of Apple’s own apps not following the Human Interface Guidelines (HIG), but that isn’t the case. By my reading of the HIG, this control is a disclosure button on the Mac, but a disclosure link on iOS. Now I have two questions:

- Why do disclosure links show state and imply action while disclosure buttons show action and imply state?

- Why is it a button on the Mac and a link on iOS?

I feel like there ought to be a clear answer to the first one, but the examples in the HIG don’t elucidate the differences for me. Regarding the second question, I think it’s a mistake by whoever designed it. Disclosure links are all over macOS and they do exactly what this disclosure button in Safari does: expand a list of items to show more. Why should this control be different?